The ultimate guide to lab planning and scheduling digitalization

The landscape of biopharmaceutical laboratory operations has drastically evolved in the last decade. With constrained budgets and accelerated timelines, pharmaceutical companies must optimize resource usage and prevent capacity bottlenecks. This guide aims to help leadership achieve operational excellence through the digitalization of lab planning and scheduling.

In this guide, you’ll discover:

- Why Excel is not cost-efficient for resource management

- How to optimize your QC lab resource planning in six steps

- How to retain your most valuable asset: lab analysts

- How to choose the right lab resource planning & scheduling software

1. The Future of Pharma QC Labs

Digitalizing the lab is more than just going paperless. It’s about process optimization and efficiency gains.

In one of our user meetings, somebody summarized it beautifully: “the initial goal was to go paperless, but it’s about more than just that. The real value of digitalization is not that it replaces paper. It’s that it should make our processes more fluent, resulting in gains in efficiency and ultimately allow us to develop new medicine faster”.

Why You Need to Move Beyond Paperless

In a continuing effort to reduce costs and increase lab efficiency, many companies are looking to digitalize and automate laboratory processes, e.g. by capturing more detail in their LIMS and/or ELN. Such extensions can range from capturing all method details (with or without a CDMS) to bidirectional instrument integration. This kind of digitalization clearly helps increase data integrity.

McKinsey published an interesting article on the vast improvement potential it sees in pharma QC labs. The article outlines three possible horizons that QC leaders can aim for.

McKinsey’s Three Possible Horizons for QC Labs

- Digitally Enabled Labs: Emphasis on connected instruments and advanced planning.

- Automated Labs: Focus on automated testing processes.

- Distributed Quality Control: Real-time testing at the line.


2. Why Excel is Outdated

Many lab managers have become spreadsheet gurus of sorts. Spreadsheets let a business set things up fast and are extremely flexible, but at some point they mutate into unrelenting monsters that become uncontrollable, produce errors and eat up a massive number of working hours. This in turn results in hidden costs, for the following four reasons:

- No Single Source of Truth: Multiple teams maintain separate data sources.

- Data Accuracy Issues: Frequent checks and re-checks.

- Changing Requirements and Planners: Excel sheets become outdated and require frequent updates.

- Inaccurate Capacity Planning: Excel is not built for complex “what-if” scenarios.

The Hidden Costs of Excel

The hidden factory cost of maintaining Excel planning sheets for 5 teams of 10 people adds up to $45K per year.

In Lean Six Sigma the hidden factory is defined as “the extra useful, positive output that would theoretically be possible if the energy directed at creating waste were released”.

We assume that the initial creation of the resource planning sheet is useful. Entering data and evaluating output to prepare planning decisions is considered useful as well, but not when the same work is replicated across all teams. In that case, the effort of 4 out of 5 teams ends up as waste.
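The replication argument can be written out as simple arithmetic. The sketch below is illustrative only: it assumes every team spends the same number of hours per year on its own copy of the planning sheet and that only one copy adds value. The function names and inputs are hypothetical; they are not the inputs behind the $45K figure.

```python
def waste_fraction(num_teams: int) -> float:
    """Share of replicated planning effort that is waste when only one copy is useful."""
    if num_teams < 1:
        raise ValueError("num_teams must be at least 1")
    return (num_teams - 1) / num_teams


def hidden_factory_cost(num_teams: int, hours_per_team: float, hourly_rate: float) -> float:
    """Yearly cost of the duplicated effort: every copy beyond the first is waste."""
    if num_teams < 1:
        raise ValueError("num_teams must be at least 1")
    return (num_teams - 1) * hours_per_team * hourly_rate


if __name__ == "__main__":
    # Hypothetical inputs: 5 teams, 100 hours per team per year, rate of 60 per hour.
    print(waste_fraction(5))                # 0.8
    print(hidden_factory_cost(5, 100, 60))  # 24000
```

With 5 teams, 80% of the replicated effort is waste, which matches the "4 out of 5 teams" reasoning above.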

3. Six Steps to Optimize Your QC Lab Resource Planning

Demand comes from multiple sources. You should consolidate the most important sources of demand for your QC lab, such as lot release and product stability testing, deviation and out-of-spec investigations, method validations and transfers, instrument and facility qualifications or calibrations, regulatory activities, reagent and standards management, and much more. The most common ways of consolidating this data are:

- Automatic capture: integration with existing business systems such as supply chain planning, ERP or LIMS applications captures a large part of the lab demand automatically. Demand for release and stability testing, for example, is captured without human intervention.

- Easy upload: transfer the content from existing Excel workbooks.

- Manual entry: enter data and make changes in a user interface to complete the full picture.
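A consolidated demand picture boils down to merging records from several channels into one list. The sketch below assumes each source (system integration, Excel upload or manual entry) has already been converted into a common, hypothetical `DemandItem` record; it then totals the requested samples per test method and week. Real integrations would also need to deduplicate records and map source-specific identifiers, which is left out here.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class DemandItem:
    source: str       # where the demand came from, e.g. "LIMS", "excel", "manual"
    test_method: str  # test method identifier
    week: int         # week in which the work is due
    samples: int      # number of samples requested


def consolidate(*sources: list[DemandItem]) -> dict[tuple[str, int], int]:
    """Merge demand lists from several sources into total samples per (test method, week)."""
    totals: dict[tuple[str, int], int] = defaultdict(int)
    for items in sources:
        for item in items:
            totals[(item.test_method, item.week)] += item.samples
    return dict(totals)


if __name__ == "__main__":
    lims = [DemandItem("LIMS", "HPLC-assay", 12, 30)]
    excel = [DemandItem("excel", "HPLC-assay", 12, 10), DemandItem("excel", "KF-water", 13, 5)]
    manual = [DemandItem("manual", "KF-water", 13, 2)]
    print(consolidate(lims, excel, manual))
    # {('HPLC-assay', 12): 40, ('KF-water', 13): 7}
```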

Lab supervisors also need to manage the competences and availability of analysts and technicians. As a team manager, you must keep track of demand so you can swiftly reallocate resources during peak periods while still overseeing everything.
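Managing competences and availability together is what makes swift reallocation possible. As a minimal sketch, assume a hypothetical competence matrix stored as the set of test methods each analyst is qualified for, plus available hours per week; finding who can take on extra work is then a simple filter.

```python
from dataclasses import dataclass, field


@dataclass
class Analyst:
    name: str
    competences: set[str] = field(default_factory=set)               # qualified test methods
    available_hours: dict[int, float] = field(default_factory=dict)  # week -> free hours


def qualified_and_available(team: list[Analyst], method: str, week: int,
                            min_hours: float) -> list[str]:
    """Names of analysts qualified for `method` with at least `min_hours` free in `week`."""
    return [
        a.name
        for a in team
        if method in a.competences and a.available_hours.get(week, 0.0) >= min_hours
    ]


if __name__ == "__main__":
    team = [
        Analyst("Ana", {"HPLC-assay", "KF-water"}, {12: 20.0}),
        Analyst("Ben", {"KF-water"}, {12: 35.0}),
        Analyst("Chloe", {"HPLC-assay"}, {12: 4.0}),
    ]
    print(qualified_and_available(team, "HPLC-assay", 12, 8))  # ['Ana']
    print(qualified_and_available(team, "KF-water", 12, 8))    # ['Ana', 'Ben']
```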

Because QC labs perform tests for different demand channels, modeling test methods as standardized work helps you focus on real improvements rather than sub-optimizations. It starts with defining, for example:

- Workload and lead-times

- Preparation, set-up and execution activities

- Campaign/batch sizes

- Instrument capacity constraints (e.g. number of runs per week)

Defining standardized work is a common approach in Lean Six Sigma projects, typically as a result of value-stream mapping.
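Modeling a test method as standardized work means capturing its workload per activity together with its batch and instrument constraints. The hypothetical model below assumes preparation time scales per sample, while set-up and execution time are incurred once per run; the field names and numbers are illustrative, not a prescribed data model.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class MethodModel:
    name: str
    prep_hours_per_sample: float  # hands-on preparation per sample
    setup_hours_per_run: float    # set-up, incurred once per run
    exec_hours_per_run: float     # hands-on execution and review per run
    batch_size: int               # maximum samples per run (campaign/batch size)
    max_runs_per_week: int        # instrument capacity constraint

    def runs_needed(self, samples: int) -> int:
        """Number of runs needed to test `samples` samples."""
        return math.ceil(samples / self.batch_size)

    def analyst_hours(self, samples: int) -> float:
        """Standardized analyst workload for `samples` samples."""
        runs = self.runs_needed(samples)
        per_run = self.setup_hours_per_run + self.exec_hours_per_run
        return samples * self.prep_hours_per_sample + runs * per_run

    def fits_instrument(self, samples: int) -> bool:
        """True if the required runs stay within the weekly instrument constraint."""
        return self.runs_needed(samples) <= self.max_runs_per_week


if __name__ == "__main__":
    hplc = MethodModel("HPLC-assay", 0.5, 2.0, 1.0, 20, 10)
    print(hplc.runs_needed(45), hplc.analyst_hours(45), hplc.fits_instrument(45))  # 3 31.5 True
```

Separating per-sample from per-run time is what later makes campaign-size questions answerable.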

Once demand, capacity and standardized work have been defined, you can calculate your resource requirements. How easily can you shape reports to answer these types of questions?

- Which teams can commit available time?

- Which competences should team leaders develop or acquire?

- Which instruments have capacity shortfalls?

- How do the value-added versus non-value-added activities compare?

- What is the workload for a specific project?

- Which efficiency-improvement projects have the most impact?
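Once demand and standardized work are in place, the core resource calculation multiplies demand by standardized hours and compares the result with available capacity. The sketch below does this per competence; the same logic applies to instruments. The inputs and function names are hypothetical.

```python
from collections import defaultdict


def requirements_by_competence(
    demand: dict[str, int],              # test method -> samples this period
    hours_per_sample: dict[str, float],  # test method -> standardized hours per sample
    competence_of: dict[str, str],       # test method -> competence needed to run it
) -> dict[str, float]:
    """Sum the standardized workload per competence."""
    required: dict[str, float] = defaultdict(float)
    for method, samples in demand.items():
        required[competence_of[method]] += samples * hours_per_sample[method]
    return dict(required)


def gaps(required: dict[str, float], available: dict[str, float]) -> dict[str, float]:
    """Positive value = shortfall in hours, negative value = spare capacity."""
    keys = set(required) | set(available)
    return {k: required.get(k, 0.0) - available.get(k, 0.0) for k in sorted(keys)}


if __name__ == "__main__":
    demand = {"HPLC-assay": 40, "HPLC-impurities": 20, "KF-water": 30}
    hours = {"HPLC-assay": 0.75, "HPLC-impurities": 1.5, "KF-water": 0.25}
    competence = {"HPLC-assay": "HPLC", "HPLC-impurities": "HPLC", "KF-water": "KF"}
    required = requirements_by_competence(demand, hours, competence)
    print(gaps(required, {"HPLC": 48.0, "KF": 16.0}))  # {'HPLC': 12.0, 'KF': -8.5}
```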

Asking “What if?” questions is common practice in QC labs: they help you discover potential issues and compare different solutions before deciding on the best option. We found a way to experiment with scenarios and calculate the return on investment of questions such as:

- What if you change the campaign/batch size?

- What if you reduce lead times or workload?

- What if demand volume and mix changes occur?

- What will be the impact of new product introductions?

- What if you enlarge the test capacity by training new analysts?

- What if you add extra technicians?
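A what-if scenario is essentially the same calculation rerun with changed inputs. The hypothetical single-method model below assumes set-up time is incurred once per run, so larger campaigns spread it over more samples; comparing utilization before and after a change shows whether a bottleneck disappears. A real scenario tool would cover many methods, instruments and weeks at once.

```python
import math
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Scenario:
    samples: int                  # weekly demand for one test method
    batch_size: int               # samples per run (campaign size)
    prep_hours_per_sample: float  # hands-on time per sample
    setup_hours_per_run: float    # set-up time, incurred once per run
    analysts: int                 # qualified analysts
    hours_per_analyst: float      # productive hours per analyst per week

    def required_hours(self) -> float:
        runs = math.ceil(self.samples / self.batch_size)
        return self.samples * self.prep_hours_per_sample + runs * self.setup_hours_per_run

    def capacity_hours(self) -> float:
        return self.analysts * self.hours_per_analyst

    def utilization(self) -> float:
        """Above 1.0 means demand exceeds capacity."""
        return self.required_hours() / self.capacity_hours()


if __name__ == "__main__":
    baseline = Scenario(samples=120, batch_size=10, prep_hours_per_sample=0.5,
                        setup_hours_per_run=3.0, analysts=2, hours_per_analyst=30.0)
    bigger_batches = replace(baseline, batch_size=30)      # what if we change the batch size?
    extra_analyst = replace(bigger_batches, analysts=3)    # ...and train one more analyst?
    for label, s in [("baseline", baseline), ("batch size 30", bigger_batches),
                     ("batch size 30 + 1 analyst", extra_analyst)]:
        print(f"{label}: {s.required_hours()} h needed, {s.utilization():.0%} utilization")
```

Using `dataclasses.replace` keeps the baseline untouched, so every scenario stays comparable with the original.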

Last but not least, once the planning is complete, make sure things get done. Providing teams with a comprehensive task list and tracking work progress is what makes the difference. Tracking progress also feeds your performance dashboard with KPIs such as adherence to plan, throughput and foresight.
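Task-level tracking makes such KPIs straightforward to compute. The sketch below assumes one common set of definitions: adherence to plan as the share of planned tasks completed on or before their planned date, and throughput as the number of tasks completed in a period. These definitions and the `Task` record are assumptions; foresight is not defined in this guide, so it is left out.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Task:
    planned_date: date
    completed_date: Optional[date] = None  # None means not completed yet


def adherence_to_plan(tasks: list[Task]) -> float:
    """Share of planned tasks completed on or before their planned date."""
    if not tasks:
        return 0.0
    on_time = sum(
        1 for t in tasks
        if t.completed_date is not None and t.completed_date <= t.planned_date
    )
    return on_time / len(tasks)


def throughput(tasks: list[Task], start: date, end: date) -> int:
    """Number of tasks completed between `start` and `end`, inclusive."""
    return sum(
        1 for t in tasks
        if t.completed_date is not None and start <= t.completed_date <= end
    )


if __name__ == "__main__":
    tasks = [
        Task(date(2024, 3, 4), date(2024, 3, 4)),  # on time
        Task(date(2024, 3, 5), date(2024, 3, 7)),  # late
        Task(date(2024, 3, 6)),                    # still open
        Task(date(2024, 3, 7), date(2024, 3, 6)),  # early
    ]
    print(adherence_to_plan(tasks))                               # 0.5
    print(throughput(tasks, date(2024, 3, 4), date(2024, 3, 8)))  # 3
```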

4. Retain Your Most Valuable Asset: Lab Analysts

With the ever-growing focus on improving lab efficiency and service levels, it’s more crucial than ever not to lose sight of your most valuable asset: your people.

The rule of thumb for employee retention is straightforward: amid optimizations, lean lab exercises and the push for higher efficiency and better service levels, keep reminding everyone involved that our people are not machines. Although this might sound self-evident, finding the right balance is often challenging in a lab context.

For instance, how can you optimize your ways of working within the boundaries of what is possible and comfortable for your people? Here are 4 guidelines to keep in mind when answering that question:

- People like to know what’s ahead: Aim to implement processes that stabilize the schedule as much as possible over the next two weeks and avoid last-minute changes.

- People like to be treated equally: Limit periods of increased workload for senior staff and communicate them clearly, to prevent burnout and preserve their ability to handle future peaks.

- People like a variety of work: Make sure your people can work on different projects and tasks.

- People don’t like surprises: Look for ways to reduce ‘fire-fighting’ to a minimum, so you don’t put too much strain on your people too frequently.
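The first guideline translates naturally into a scheduling rule often called a frozen horizon. The sketch below is a generic illustration, not the Binocs algorithm: it assumes a 14-day window (the “next two weeks”) and rejects non-urgent changes that move a task out of, or into, that window. The function name and the `urgent` override are hypothetical.

```python
from datetime import date, timedelta

FROZEN_DAYS = 14  # stabilize the next two weeks; adjust to your lab's policy


def is_change_allowed(old_date: date, new_date: date, today: date,
                      frozen_days: int = FROZEN_DAYS, urgent: bool = False) -> bool:
    """Allow a reschedule only if both the old and new dates lie beyond the frozen window."""
    if urgent:
        return True  # genuine emergencies pass, but they should stay rare
    horizon = today + timedelta(days=frozen_days)
    return old_date >= horizon and new_date >= horizon


if __name__ == "__main__":
    today = date(2024, 3, 4)  # dates before 2024-03-18 are frozen
    print(is_change_allowed(date(2024, 3, 20), date(2024, 3, 25), today))  # True
    print(is_change_allowed(date(2024, 3, 8), date(2024, 3, 20), today))   # False
    print(is_change_allowed(date(2024, 3, 20), date(2024, 3, 10), today))  # False
```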

The Binocs algorithm is built around these 4 guidelines

Of course, we wouldn’t be talking about these guidelines without taking them to heart. People are not machines, and that’s also the idea around which Binocs is built.

5. Use Case: Building Your Business Case for Lab Automation

Using automated systems in the lab can save researchers from performing time-consuming and repetitive tasks. This frees them up to carry out more specialized processes. Also, automating laboratory tasks improves the efficiency of experimental processes by speeding up tasks, using lower quantities of reagents, cutting waste and allowing for higher throughput of experiments. This higher efficiency leads to lower running costs for the laboratory.

Sounds great! But don’t get ahead of yourself: there are two critical questions to ask before implementing automation.

Which Lab Activities Should You Automate?

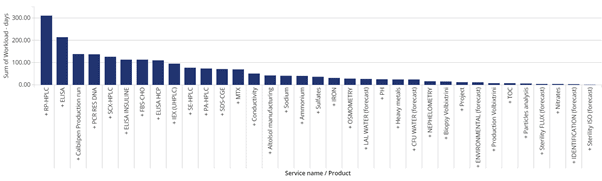

Just like with anything else in life, lab work is subject to Pareto’s 80:20 principle: your lab analysts spend the majority of their time on only a relatively small number of test methods.

- Perform a Pareto analysis.

- Analyze your role cards or standard work workflows and determine which specific activities within them are ideal candidates for automation.
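A Pareto analysis ranks test methods by the analyst time they consume and keeps the smallest set that covers a chosen share, typically 80%, of total hours. The minimal sketch below assumes you already have yearly hours per method, for example from your task tracking; the method names and hours are made up for illustration.

```python
def pareto_top(hours_by_method: dict[str, float], threshold: float = 0.8) -> list[str]:
    """Smallest set of methods (largest first) whose cumulative share of hours reaches `threshold`."""
    total = sum(hours_by_method.values())
    if total <= 0:
        return []
    selected: list[str] = []
    cumulative = 0.0
    for method, hours in sorted(hours_by_method.items(), key=lambda kv: kv[1], reverse=True):
        selected.append(method)
        cumulative += hours
        if cumulative / total >= threshold:
            break
    return selected


if __name__ == "__main__":
    hours = {"HPLC-assay": 450, "Dissolution": 300, "KF-water": 80,
             "pH": 60, "Appearance": 50, "TOC": 60}
    print(pareto_top(hours))  # ['HPLC-assay', 'Dissolution', 'KF-water']
```

In this made-up example, 3 of 6 methods account for 83% of the hours, so their workflows are the first place to look for automation candidates.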

How Much Efficiency Will You Gain?

- Use What-If simulation capabilities to forecast ROI.

- Measure time saved and productivity improvements.
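Once a what-if simulation or a pilot measurement gives you the expected hours saved, the financial side is simple arithmetic. The sketch below uses a basic, undiscounted ROI and payback definition; all inputs and function names are hypothetical, and a real business case would typically also consider discounting, validation effort and ramp-up time.

```python
import math


def annual_net_benefit(hours_saved_per_year: float, hourly_cost: float,
                       annual_running_cost: float) -> float:
    """Value of the freed-up analyst time minus the yearly cost of running the automation."""
    return hours_saved_per_year * hourly_cost - annual_running_cost


def roi(investment: float, annual_benefit: float, years: int) -> float:
    """Simple (undiscounted) return on investment over `years`."""
    return (annual_benefit * years - investment) / investment


def payback_years(investment: float, annual_benefit: float) -> float:
    """Years until cumulative benefit equals the investment (inf if it never does)."""
    if annual_benefit <= 0:
        return math.inf
    return investment / annual_benefit


if __name__ == "__main__":
    benefit = annual_net_benefit(hours_saved_per_year=1500, hourly_cost=60,
                                 annual_running_cost=20000)
    print(benefit)                        # 70000
    print(payback_years(140000, benefit))  # 2.0
    print(roi(140000, benefit, years=3))   # 0.5
```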

Calculate your ROI

We created a simple yet powerful calculator that gives you a glimpse of your potential savings.

6. How to Choose the Right Lab Resource Planning & Scheduling Software

The true value of cloud-based solutions lies in a single, constantly updated version of the software shared by all clients. This ensures continuous improvements and future updates, whereas customized versions can become outdated and limited over time.

Some software platforms are like Lego kits: they offer components to build custom solutions, but may fall short for specific, complex challenges. Instead, consider niche solutions like Binocs, which are designed for particular industries and can be implemented quickly based on best practices and user feedback.

Enterprise software often appeals to buyers with extensive features and configuration options but can be cumbersome for daily users. Prioritize software designed with end users in mind, which executes specific tasks exceptionally well rather than offering broad, superficial capabilities.

When adding new software to a laboratory that already runs digital systems, make sure it integrates smoothly. Evaluate more than just APIs and standard interfaces: also consider the time and resources needed to code the integration and to adapt it to future changes.

Conclusion

You now have the knowledge and tools to successfully digitalize your laboratory planning and scheduling operations. Biopharma laboratories that digitalize their lab operations management today will have a competitive advantage tomorrow over those that start later. You don’t need a LIMS, ERP or any other digital tool in place first to start your journey towards laboratory operational excellence.

Remember, “scheduling automation and optimization can be implemented quickly and start generating significant value even if a lab is not yet mostly paperless and fully digitized”. Start your digitalization journey today to reap significant long-term benefits.


Discover how Binocs can make your life easier

Book your free discovery tour so we can guide you through the platform and answer any questions you have.